Image matching and object recognition using SIFT keypoints.


This directory contains a suite of classes for matching images and recognizing objects within them. The general inspiration comes from David Lowe's work at the University of British Columbia, Canada; most of the implementation was written here from a careful reading of his 2004 IJCV paper.

Some of the code here is also based on the Hugin panorama software.

Given an image, a number of Scale-Invariant Feature Transform (SIFT) keypoints can be extracted. These keypoints mark image locations with distinctive local appearance, for example the corner of a textured object, a letter, an eye, or a mouth. Typical images contain many such keypoints, usually hundreds to thousands.
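Lowe's paper locates candidate keypoints as extrema of a difference-of-Gaussians (DoG) scale space. The Python sketch below illustrates only that detection criterion: a sample is kept if it is strictly larger or smaller than all 26 neighbours in the 3x3x3 block spanning its own scale and the two adjacent ones. It is not the ScaleSpace class; the function name `find_dog_extrema`, the nested-list image layout, and the `threshold` contrast cut-off are hypothetical choices for illustration. Sub-pixel refinement, edge-response rejection, orientation assignment and descriptor computation are all omitted.

```python
def find_dog_extrema(dog, threshold=0.03):
    """Return (scale, row, col) for every sample that is a strict extremum of
    its 3x3x3 neighbourhood in a difference-of-Gaussians stack.

    dog: list of equally sized 2D lists, ordered by increasing scale.
    threshold: hypothetical contrast cut-off; samples with |value| below it
               are discarded before the neighbourhood test.
    """
    extrema = []
    # The first and last scales lack a neighbour on one side, so skip them;
    # likewise skip image borders.
    for s in range(1, len(dog) - 1):
        rows, cols = len(dog[s]), len(dog[s][0])
        for r in range(1, rows - 1):
            for c in range(1, cols - 1):
                v = dog[s][r][c]
                if abs(v) < threshold:
                    continue  # low-contrast sample, not a stable keypoint
                # The 26 neighbours in space and scale.
                neighbours = [
                    dog[s + ds][r + dr][c + dc]
                    for ds in (-1, 0, 1)
                    for dr in (-1, 0, 1)
                    for dc in (-1, 0, 1)
                    if (ds, dr, dc) != (0, 0, 0)
                ]
                if v > max(neighbours) or v < min(neighbours):
                    extrema.append((s, r, c))
    return extrema
```

For example, a stack of three 5x5 zero images whose middle level has a single bright sample at row 2, column 2 yields `[(1, 2, 2)]`.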

Given two images, we can extract two lists of keypoints (class ScaleSpace, class Keypoint) and store them (class VisualObject, class VisualObjectDB). We can then look for keypoints that have similar visual appearance in the two images (class KeypointMatch, class KDTree, class VisualObjectMatch). Given a set of matching keypoints, we can try to recover the geometric transform that relates the first image to the second (class VisualObjectMatch).
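The matching step can be illustrated with the nearest-neighbour distance-ratio test from Lowe's paper: a keypoint in the first image is matched to its closest descriptor in the second image only if that neighbour is clearly closer than the second-closest one. The sketch below is a brute-force stand-in for what a KD-tree would accelerate; it does not reproduce the KeypointMatch or KDTree classes. The function name `match_keypoints`, the tuple-of-floats descriptor representation, and the default `ratio` of 0.8 are assumptions for illustration.

```python
import math


def match_keypoints(desc1, desc2, ratio=0.8):
    """Match descriptors of image 1 against image 2 using a nearest-neighbour
    distance-ratio test (brute force; a KD-tree would speed up the search).

    desc1, desc2: sequences of equal-length numeric tuples (hypothetical format).
    Returns a list of (i, j) pairs: desc1[i] matched to desc2[j].
    """
    matches = []
    if len(desc2) < 2:
        return matches  # the ratio test needs a second-nearest neighbour
    for i, d1 in enumerate(desc1):
        # Euclidean distance to every candidate, sorted nearest first.
        dists = sorted((math.dist(d1, d2), j) for j, d2 in enumerate(desc2))
        (best, j_best), (second, _) = dists[0], dists[1]
        # Keep the match only if it is unambiguous.
        if best < ratio * second:
            matches.append((i, j_best))
    return matches
```

Rejecting ambiguous matches, rather than always taking the nearest descriptor, discards keypoints from repetitive texture that would otherwise produce many false correspondences.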

This can be used to stitch two or more images together to form a mosaic or panorama. It can also be used to recognize attended locations as matching known objects stored in an object database (see Neuro/Inferotemporal).
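Stitching and recognition both rely on the geometric transform recovered from matched keypoints. Lowe's paper fits an affine model by least squares to a cluster of consistent matches; the Python sketch below shows only that fitting step, solving the 3x3 normal equations separately for the x and y coordinates. It is not the VisualObjectMatch implementation, and outlier rejection (which a real pipeline needs, since raw matches contain errors) is omitted. The names `fit_affine`, `apply_affine`, `solve3` and `det3` are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, rhs):
    """Solve the 3x3 system m @ p = rhs by Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate (e.g. collinear) point configuration")
    out = []
    for k in range(3):
        mk = [row[:] for row in m]
        for i in range(3):
            mk[i][k] = rhs[i]  # replace column k with the right-hand side
        out.append(det3(mk) / d)
    return tuple(out)


def fit_affine(src, dst):
    """Least-squares affine map from src points to dst points.

    Returns ((a, b, tx), (c, d, ty)) such that
    x' = a*x + b*y + tx and y' = c*x + d*y + ty.
    Requires at least 3 non-collinear point pairs.
    """
    M = [[0.0] * 3 for _ in range(3)]  # normal matrix: sum of [x,y,1]^T [x,y,1]
    vx = [0.0] * 3                      # right-hand side for x'
    vy = [0.0] * 3                      # right-hand side for y'
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                M[i][j] += row[i] * row[j]
            vx[i] += row[i] * u
            vy[i] += row[i] * v
    return solve3(M, vx), solve3(M, vy)


def apply_affine(params, point):
    """Map a point from the first image into the second image's frame."""
    (a, b, tx), (c, d, ty) = params
    x, y = point
    return (a * x + b * y + tx, c * x + d * y + ty)
```

With exact correspondences the fit recovers the generating transform up to floating-point error; with noisy matches it returns the transform minimizing the summed squared residuals.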

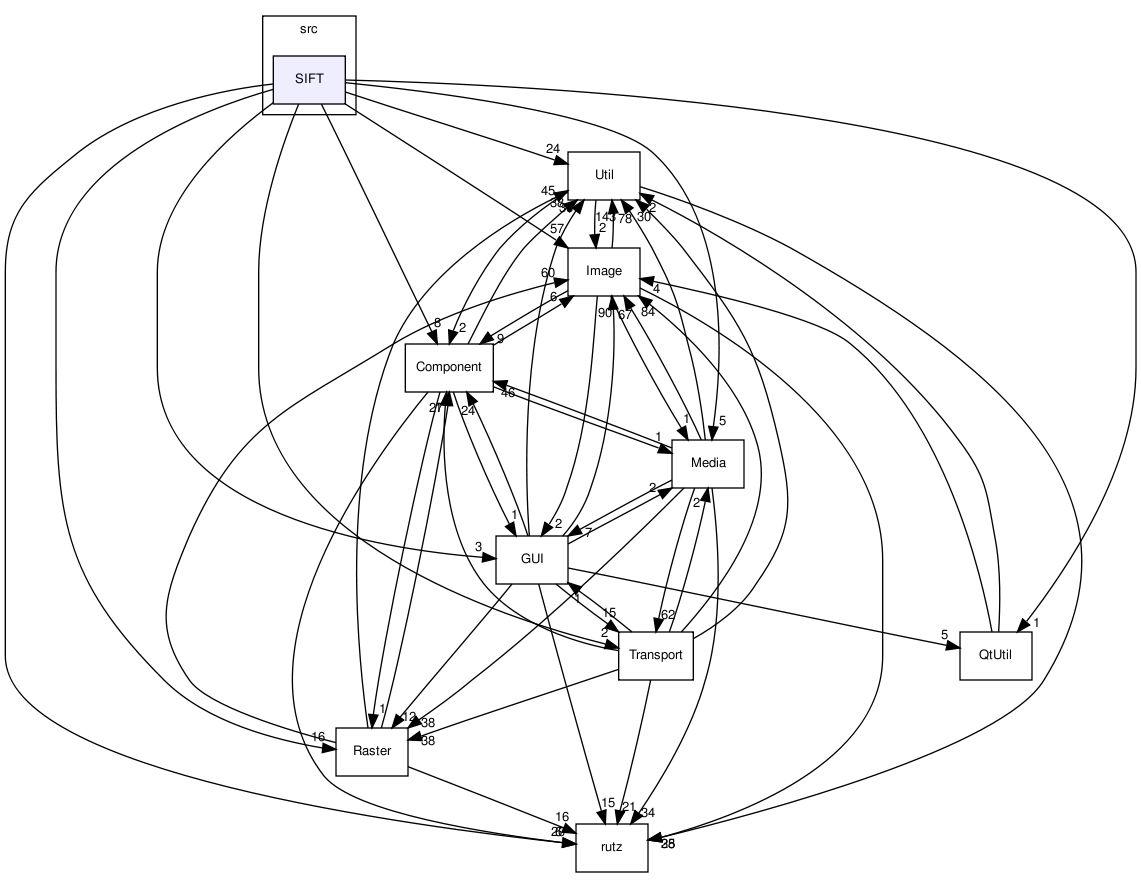

