The field of Human-Robot Interaction (HRI) encompasses a vast range of research, from psychology to reinforcement learning to action recognition. For our project, we are particularly interested in interaction in outdoor environments, where communication has to be established with both collaborators (such as policemen and firemen in an emergency response situation) and victims.

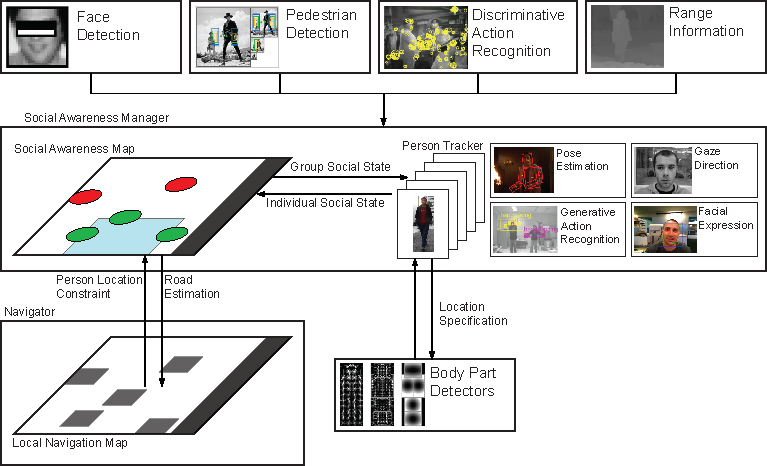

First, however, we need to create a real-time person recognition system, which is a challenge given the complexity of the available recognition systems. Fortunately, two components are both robust and real-time: face detection and pedestrian detection. It is critical that the vision system detect the people around Beobot 2.0 efficiently and accurately. The system can then refine the information that we have about them through processes such as pose, facial expression, gesture, and action recognition. This process is illustrated in the accompanying figure.
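The two-stage idea described above (fast detection first, slower refinement afterwards) can be sketched as follows. This is only a minimal illustration, not the Beobot 2.0 implementation: the names `Person`, `detect_people`, and `refine_people` are hypothetical, and the detectors and refiners are assumed to be plain callables supplied by the caller.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical types for illustration; none of these names come from Beobot 2.0.
Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass
class Person:
    box: Box
    source: str  # name of the detector that found this person
    attributes: Dict[str, str] = field(default_factory=dict)


Detector = Callable[[object], List[Box]]
Refiner = Callable[[object, Person], str]


def detect_people(frame: object, detectors: Dict[str, Detector]) -> List[Person]:
    """Stage 1: run every fast detector and collect the people it reports."""
    people: List[Person] = []
    for name, detect in detectors.items():
        for box in detect(frame):
            people.append(Person(box=box, source=name))
    return people


def refine_people(
    frame: object, people: List[Person], refiners: Dict[str, Refiner]
) -> List[Person]:
    """Stage 2: run the slower recognizers only on already-detected people."""
    for person in people:
        for name, refine in refiners.items():
            person.attributes[name] = refine(frame, person)
    return people
```

In this sketch the expensive recognizers (pose, facial expression, gesture, action) never see a frame region unless a detector has flagged it. Detections from different detectors are not merged here, so a face found inside a pedestrian box would appear as two separate entries; a real system would need to associate them.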

Target Application

In the future, our target scenarios are situations where the robot walks into an urban outdoor setting and has to:

- Recognize people

- Recognize people who are having difficulties

- Approach them

- Note if they notice the robot

- Recognize if they welcome the robot

Once the robot is in close proximity to the person, we can hand over to another (natural language processing based) interaction system to ask about their current situation. For an outdoor robot, the main problem is the initial communication stage.
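One way to picture the scenario above is as a small state machine that moves from searching for people to handing over to the dialogue system. The sketch below is an assumption made for illustration only: the stage names, the `Observation` fields, and the fallback behavior (returning to the search when the person is lost, waiting when the person has not yet noticed or welcomed the robot) are hypothetical and are not specified by the project.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Stage(Enum):
    SEARCH = auto()         # looking for people
    ASSESS = auto()         # deciding whether a person is having difficulties
    APPROACH = auto()       # moving toward the person until they notice the robot
    CHECK_WELCOME = auto()  # checking whether the person welcomes the robot
    DIALOGUE = auto()       # hand-off to the language-based interaction system


@dataclass
class Observation:
    # Hypothetical outputs of the vision system for the current frame.
    person_visible: bool = False
    in_difficulty: bool = False
    noticed_robot: bool = False
    welcomes_robot: bool = False


def next_stage(stage: Stage, obs: Observation) -> Stage:
    """Advance one step; fall back to SEARCH whenever the person is lost."""
    if not obs.person_visible:
        return Stage.SEARCH
    if stage is Stage.SEARCH:
        return Stage.ASSESS
    if stage is Stage.ASSESS:
        return Stage.APPROACH if obs.in_difficulty else Stage.SEARCH
    if stage is Stage.APPROACH:
        return Stage.CHECK_WELCOME if obs.noticed_robot else Stage.APPROACH
    if stage is Stage.CHECK_WELCOME:
        return Stage.DIALOGUE if obs.welcomes_robot else Stage.CHECK_WELCOME
    return Stage.DIALOGUE
```

Every transition before `DIALOGUE` depends only on visual cues, which reflects the point that the initial communication stage is the main problem for an outdoor robot.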

Our research in this field differs from traditional HRI and vision-related work in two ways:

- In most other interaction research, communication with the robot is already established and the subjects welcome interaction

- We do not try to recognize the person's posture or activity (whether the person is walking, etc.). Instead, we try to directly recognize the situation, which includes high-level components such as a person's level of anxiety, from visual cues alone

To Do

We are currently implementing our social awareness infrastructure, which should robustly recognize the people surrounding Beobot 2.0. Afterwards, we can focus on more detailed information about those people. We are also interested in utilizing information about group activities.

Another research focus is implementing a biological motion detector (much like a saliency map approach):

- It has to run in real time

- It has to distinguish biological from non-biological motion, and should work even better when people are trying to get the robot's attention

- It should capture the intentionality, or sense of purposefulness, of a motion, as opposed to the motion of a merely floating object