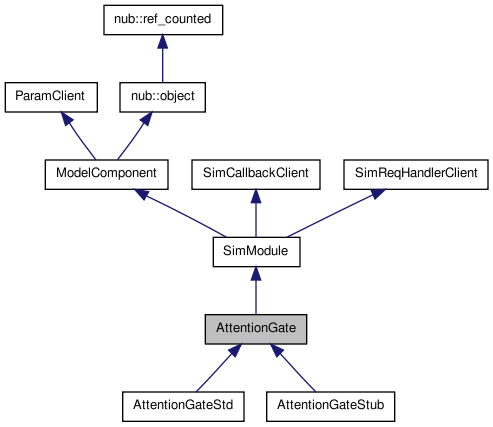

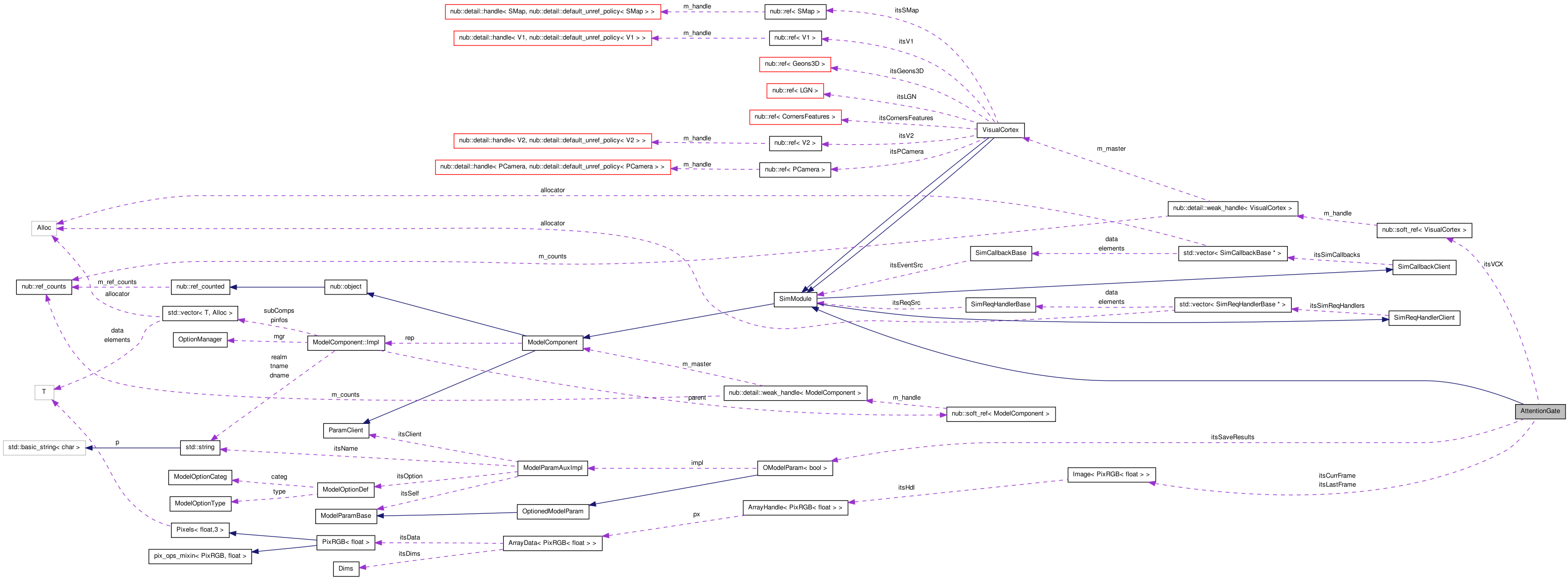

The Attention Gate Class.

#include <Neuro/AttentionGate.H>

Public Member Functions

Constructor, destructor

| Type | Member | Description |
| --- | --- | --- |
| | AttentionGate (OptionManager &mgr, const std::string &descrName="Attention Gate Map", const std::string &tagName="AttentionGate", const nub::soft_ref< VisualCortex > vcx=nub::soft_ref< VisualCortex >()) | Uninitialized constructor. |
| virtual | ~AttentionGate () | Destructor. |

Protected Attributes

| Type | Name | Description |
| --- | --- | --- |
| OModelParam< bool > | itsSaveResults | Save our internals when saveResults() is called? |
| nub::soft_ref< VisualCortex > | itsVCX | |
| unsigned short | itsSizeX | |
| unsigned short | itsSizeY | |
| unsigned int | itsFrameNumber | |
| Image< PixRGB< float > > | itsLastFrame | |
| Image< PixRGB< float > > | itsCurrFrame | |
| float | itsLogSigO | |
| float | itsLogSigS | |

The Attention Gate Class.

This class models both stages of the two-stage attention gating mechanism. The first stage decides what visual information gets through to the next stage. The second stage is not so much a gate as it is an integrator.

Stage One: The first stage must account for both attention blocking and attention capture. For each frame, which parts of the image get through is therefore affected by the frames that come before it as well as those that come after it. For something to get through, it must be more powerful than what comes in the next image (Blocking) and more powerful than what came before it (Capture).
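The blocking and capture conditions can be illustrated with a minimal sketch. It assumes each frame has already been reduced to a flat map of per-location strengths and uses a hard comparison. The function name `stageOneGate` and the zeroing of blocked locations are assumptions made for illustration; they are not taken from AttentionGate.C, which may use a graded rather than all-or-nothing rule.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper, not part of AttentionGate: a hard-threshold version
// of the stage-one rule. A location in the current frame passes only if it
// is stronger than the same location in the previous frame (capture) and
// in the next frame (blocking). Locations that fail either test are zeroed.
std::vector<float> stageOneGate(const std::vector<float>& prev,
                                const std::vector<float>& curr,
                                const std::vector<float>& next)
{
  if (prev.size() != curr.size() || next.size() != curr.size())
    throw std::invalid_argument("maps must have the same size");

  std::vector<float> out(curr.size(), 0.0f);
  for (std::size_t i = 0; i < curr.size(); ++i)
  {
    const bool captures = curr[i] > prev[i];  // beats what came before
    const bool survives = curr[i] > next[i];  // not blocked by what follows
    if (captures && survives) out[i] = curr[i];
  }
  return out;
}
```

Note that the rule needs the following frame, so a gate of this kind can only decide about frame N once frame N+1 has arrived.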

Simple: The simple model uses the basic attention guidance map as the basis for blocking. This has the advantage of simplicity, but it ignores the fact that channels seem to act at different time scales and interact in special ways.

Complex: The complex model allows different channels to dwell for longer or shorter time intervals. For instance, it seems that color should have a strong short period of action, but that luminance and orientations have a longer but less pronounced dwell time. Also, orientation channels may have orthogonal interactions.
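One way to picture per-channel dwell times is a leaky trace per channel with its own gain and decay constant. The sketch below is only an illustration of that idea: the `ChannelTrace` type, the exponential decay, and the numeric values in the usage note are all assumptions, not the model implemented in this class. Orthogonal orientation interactions are not covered.

```cpp
#include <cmath>

// Hypothetical per-channel trace, not part of AttentionGate. Each channel
// responds with its own gain and then decays with its own time constant.
struct ChannelTrace
{
  float gain;   // strength of the immediate response
  float tauMs;  // decay time constant in milliseconds (dwell time)
  float value;  // current strength of the trace

  ChannelTrace(float g, float tau) : gain(g), tauMs(tau), value(0.0f) {}

  // Decay the existing trace over dtMs, then add the weighted new input.
  void step(float input, float dtMs)
  {
    value = value * std::exp(-dtMs / tauMs) + gain * input;
  }
};
```

With assumed settings such as `ChannelTrace color(1.0f, 50.0f)` and `ChannelTrace luminance(0.4f, 300.0f)`, the color trace starts stronger but falls below the luminance trace within a few hundred milliseconds, which matches the "strong but short" versus "longer but less pronounced" description above.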

Stage Two: The second stage is an integrator. Images that come first in a series begin to assemble. If another image comes in with a set of similar features, it aids the coherence of the assembling image. However, if the first image is more coherent, it will absorb the second image. As such, a frame can block a frame that follows at 100-300 ms by absorbing it. If this happens, the first image is enhanced by absorbing the second image. The second stage emulates the reasonable expectation of visual flow in a sequence of images, but allows for non-fluid items to burst through.
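The absorb-or-burst-through behavior can be sketched with a single scalar coherence value per frame. Everything here is an assumption for illustration: the `Percept` type, the `integrate` function, the additive enhancement, and the use of a single 300 ms upper bound on the window are not taken from the AttentionGate source.

```cpp
// Hypothetical percept record, not part of AttentionGate.
struct Percept
{
  float coherence;  // how well assembled the percept is
  float onsetMs;    // arrival time of the frame in milliseconds
};

// Returns true if `held` absorbed `incoming`, meaning the incoming frame
// was blocked. Otherwise the incoming frame bursts through and replaces
// the held percept.
bool integrate(Percept& held, const Percept& incoming,
               float windowMs = 300.0f)
{
  const float lag = incoming.onsetMs - held.onsetMs;
  const bool inWindow = lag >= 0.0f && lag <= windowMs;

  if (inWindow && held.coherence > incoming.coherence)
  {
    held.coherence += incoming.coherence;  // first image is enhanced
    return true;
  }

  held = incoming;  // less coherent or too old: the new frame takes over
  return false;
}
```

In this sketch a weak frame arriving 200 ms after a stronger one is absorbed, while a frame that is more coherent than the held percept replaces it regardless of timing.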

The AG is based on the outcome of our recent RSVP work as well as work by Sperling et al. (2001) and Chun and Potter (1995).

Definition at line 106 of file AttentionGate.H.

AttentionGate::AttentionGate (OptionManager & mgr, const std::string & descrName = "Attention Gate Map", const std::string & tagName = "AttentionGate", const nub::soft_ref< VisualCortex > vcx = nub::soft_ref< VisualCortex >())

Uninitialized constructor.

The map will be resized and initialized the first time input() is called.
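The deferred initialization described here is a common pattern: the constructor records nothing about the map size, and the first input fixes the dimensions. A minimal sketch of the pattern follows. `LazyMap`, its `input` signature, and its error handling are hypothetical and stand in for the real class, whose input() takes toolkit types not shown on this page.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a map that defers sizing until the first
// input arrives. This is not the AttentionGate source.
class LazyMap
{
public:
  LazyMap() : itsSizeX(0), itsSizeY(0) {}  // no size known yet

  bool initialized() const { return !itsData.empty(); }
  unsigned short sizeX() const { return itsSizeX; }
  unsigned short sizeY() const { return itsSizeY; }

  void input(const std::vector<float>& frame,
             unsigned short w, unsigned short h)
  {
    const std::size_t n = static_cast<std::size_t>(w) * h;
    if (n == 0 || frame.size() != n)
      throw std::invalid_argument("frame size does not match dimensions");

    if (!initialized())
    {
      // First call: adopt the dimensions of the incoming frame.
      itsSizeX = w;
      itsSizeY = h;
    }
    else if (w != itsSizeX || h != itsSizeY)
    {
      throw std::invalid_argument("frame dimensions changed");
    }

    itsData = frame;  // store the latest frame
  }

private:
  unsigned short itsSizeX;
  unsigned short itsSizeY;
  std::vector<float> itsData;
};
```

The benefit of this design is that the object can be constructed and registered before any image size is known.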

Definition at line 61 of file AttentionGate.C.

AttentionGate::~AttentionGate () [virtual]

Destructor.

Definition at line 71 of file AttentionGate.C.

OModelParam<bool> AttentionGate::itsSaveResults [protected]

Save our internals when saveResults() is called?

Definition at line 129 of file AttentionGate.H.

1.6.3