
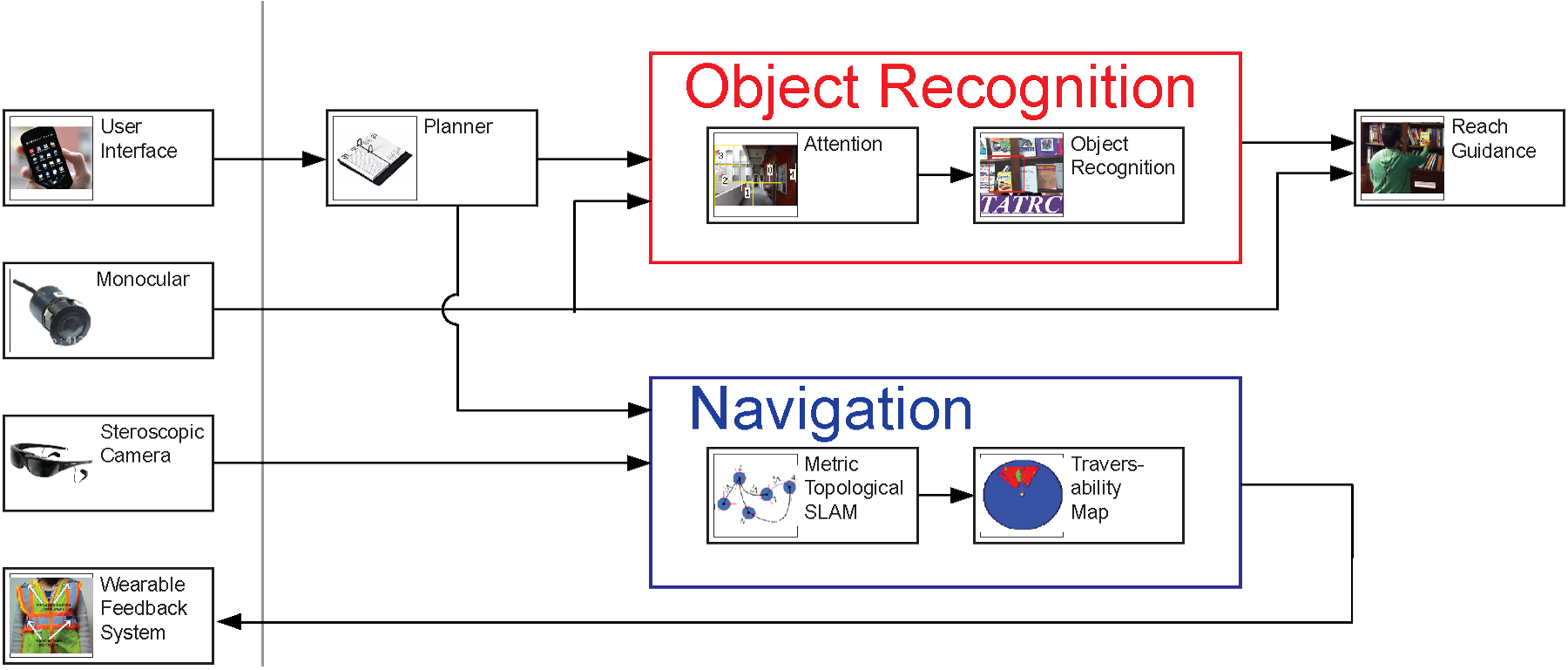

The VisualAid project aims to develop a fully integrated visual aid device that helps visually impaired users travel to a location and find an object of interest. The system uses several state-of-the-art techniques, such as SLAM (Simultaneous Localization and Mapping) and visual object detection. The device is designed to complement existing navigation tools, such as seeing-eye dogs and white canes.

The system combines a smartphone, a head-mounted camera, a computer, and vibro-tactile and bone-conduction headphones.

Specifically, the user chooses a task (“go somewhere”, “find the Cheerios”) through the smartphone user interface. For now, the project focuses on the supermarket setting. The head-mounted camera captures the user's surroundings and passes the images to the software. The vision algorithms then determine the user's whereabouts and plan a path to the goal location. Once the user arrives at the specified destination, the system pinpoints the item(s) of interest so that the user can grab them.

The system comprises three sub-systems: the user interface, navigation, and object recognition and localization.
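
The hand-off between the three sub-systems can be pictured as a small state machine. The sketch below is only an illustration of the flow described above, not the project's actual code: the names `Stage`, `Request`, and `next_stage`, and the request kinds `"go"` and `"find"`, are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Stage(Enum):
    """Hypothetical stages, one per sub-system plus a terminal state."""
    AWAIT_REQUEST = auto()   # user interface: waiting for a task
    NAVIGATE = auto()        # navigation: guiding the user to the goal
    LOCATE_OBJECT = auto()   # object recognition and localization
    DONE = auto()


@dataclass
class Request:
    kind: str     # assumed labels: "go" (go somewhere) or "find" (find an item)
    target: str   # e.g. "Cheerios"


def next_stage(stage, request, arrived=False, grabbed=False):
    """Return the stage that follows `stage`, given the latest observations."""
    if stage is Stage.AWAIT_REQUEST:
        # Stay idle until the user has chosen a task.
        return Stage.NAVIGATE if request is not None else Stage.AWAIT_REQUEST
    if stage is Stage.NAVIGATE:
        if not arrived:
            return Stage.NAVIGATE
        # A "go" task ends on arrival; a "find" task continues to the object.
        return Stage.LOCATE_OBJECT if request.kind == "find" else Stage.DONE
    if stage is Stage.LOCATE_OBJECT:
        return Stage.DONE if grabbed else Stage.LOCATE_OBJECT
    return Stage.DONE
```

In this sketch a “find the Cheerios” request passes through all three stages, while a “go somewhere” request finishes as soon as the user arrives.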

People

| | Name | Task |
|---|---|---|
| 1. | Christian Siagian | Overall system architecture |
| 2. | Nii Mante | Navigation |
| 3. | Kavi Thakkor | Object Recognition |
| 4. | Jack Eagan | User Interface |
| 5. | James Weiland | |
| 6. | Laurent Itti | |

Internal notes can be found here.

User's Manual

The user's manual can be found here.

System overview

User Interface

The user interface obtains requests from the user.

Navigation

The navigation system is used to guide the user to their destination.
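
As a rough illustration of what "creating a path to a goal location" can involve, the sketch below runs a breadth-first search over an occupancy grid. This is a stand-in technique chosen for brevity, not the project's planner: the grid representation (0 = free, 1 = blocked, such as a shelf) and the function name `plan_path` are assumptions.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest 4-connected path from start to goal, or None if unreachable.

    grid: list of rows, where 0 marks a free cell and 1 a blocked cell.
    start, goal: (row, col) tuples.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

For example, with a shelf blocking most of the middle row, `plan_path([[0, 0, 0], [1, 1, 0], [0, 0, 0]], (0, 0), (2, 0))` routes around the right-hand end of the shelf.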

Object Recognition And Localization

The object recognition and localization component detects the requested object in the user's field of view and guides the user to reach and grab it.
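
One simple way to turn a detection into guidance is to compare the centre of the detected bounding box with the centre of the camera frame. The sketch below assumes a box given as `(x_min, y_min, x_max, y_max)` in pixels with the origin at the top-left of the image; the function name `guidance_cue`, the cue words, and the `tolerance` value are hypothetical and not taken from the project.

```python
def guidance_cue(box, frame_width, frame_height, tolerance=0.1):
    """Return a coarse cue telling where the object lies relative to the view centre.

    box: (x_min, y_min, x_max, y_max) in pixels, origin at the top-left corner.
    tolerance: half-width of the central dead zone, as a fraction of the frame.
    """
    x_min, y_min, x_max, y_max = box
    # Offset of the box centre from the frame centre, normalised to [-0.5, 0.5].
    dx = ((x_min + x_max) / 2) / frame_width - 0.5
    dy = ((y_min + y_max) / 2) / frame_height - 0.5
    if abs(dx) <= tolerance and abs(dy) <= tolerance:
        return "forward"               # object is roughly centred
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    # Image y grows downward, so a negative dy means the object is higher up.
    return "down" if dy > 0 else "up"
```

A cue like this could then be mapped to a vibro-tactile or audio signal; how the actual system encodes its feedback is not specified here.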

Links (Related Projects)

- Grozi: handheld scene text recognition system that recognizes supermarket aisles. It also uses object recognition to guide the user to the object.

- OpenGlass: Google Glass-based system, which utilizes crowdsourcing to recognize objects or gain other information from pictures.

- EyeRing: Finger-worn visual assistant that provides voice feedback describing features such as the color of objects, currency values, and price tags.