Implementation of ``Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories'' by Lazebnik, et al.

#include "Neuro/GistEstimator.H"
#include "SIFT/Keypoint.H"
#include "Neuro/NeuroSimEvents.H"
#include <ostream>
#include <list>
#include <string>

Classes

| Kind | Name | Description |
| --- | --- | --- |
| class | GistEstimatorBeyondBoF | Gist estimator for ``Beyond Bags of Features ...'' by Lazebnik, et al. |
| struct | GistEstimatorBeyondBoF::SiftDescriptor | |

Functions

| Return type | Function |
| --- | --- |
| std::ostream & | operator<< (std::ostream &, const GistEstimatorBeyondBoF::SiftDescriptor &) |

The GistEstimatorBeyondBoF class implements the following paper within the INVT framework:

Lazebnik, S., Schmid, C., Ponce, J. Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories. CVPR, 2006.

In the paper, the authors describe the use of weak features (oriented edge points) and strong features (SIFT descriptors) as the basis for classifying images. In this implementation, however, we only concern ourselves with strong features clustered into 200 categories (i.e., the vocabulary size is 200 ``words''). Furthermore, we restrict the spatial pyramid used as part of the matching process to 2 levels.

We restrict ourselves to the above configuration because, as Lazebnik et al. report, it yields close to the best results. (A vocabulary size of 400 does slightly better, but not by much.)

To compute the gist vector for an image, we first divide the image into 16x16 pixel patches and compute SIFT descriptors for each of these patches. We then assign these descriptors to bins corresponding to the nearest of the 200 SIFT descriptors (vocabulary) gleaned from the training phase. This grid of SIFT descriptor indices is then converted into a feature map that specifies the grid coordinates for each of the 200 feature types. This map allows us to compute the multi-level histograms as described in the paper. The gist vectors we are interested in are simply the concatenation of all the multi-level histograms into a flat array of numbers.
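The quantization and histogram steps above can be sketched as follows. This is a minimal illustration, not the code in GistEstimatorBeyondBoF: the names `Descriptor`, `Vocabulary`, `nearestWord`, and `pyramidHistogram` are hypothetical, nearest-neighbor search uses squared Euclidean distance as an assumption, normalization simply divides by the number of patches, and the per-level weights from the paper are omitted. The sketch also assumes that pyramid level `l` splits the grid into `2^l x 2^l` cells.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical types for illustration; not the INVT class's real interface.
using Descriptor = std::vector<float>;       // e.g., 128 values for SIFT
using Vocabulary = std::vector<Descriptor>;  // e.g., 200 cluster centers

// Index of the vocabulary entry closest to d (squared Euclidean distance).
std::size_t nearestWord(const Descriptor& d, const Vocabulary& vocab)
{
    assert(!vocab.empty());
    std::size_t best = 0;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t w = 0; w < vocab.size(); ++w) {
        assert(vocab[w].size() == d.size());
        float dist = 0.0f;
        for (std::size_t i = 0; i < d.size(); ++i) {
            const float diff = d[i] - vocab[w][i];
            dist += diff * diff;
        }
        if (dist < bestDist) {
            bestDist = dist;
            best = w;
        }
    }
    return best;
}

// Concatenates per-cell word histograms for pyramid levels 0..maxLevel.
// `words` is a row-major rows x cols grid of vocabulary indices.
// Assumption: counts are normalized by the number of patches, unweighted.
std::vector<float> pyramidHistogram(const std::vector<std::size_t>& words,
                                    std::size_t rows, std::size_t cols,
                                    std::size_t vocabSize,
                                    std::size_t maxLevel)
{
    assert(rows > 0 && cols > 0 && words.size() == rows * cols);
    const float total = static_cast<float>(rows * cols);
    std::vector<float> gist;
    for (std::size_t level = 0; level <= maxLevel; ++level) {
        const std::size_t cells = std::size_t(1) << level;  // cells per side
        std::vector<float> hist(cells * cells * vocabSize, 0.0f);
        for (std::size_t r = 0; r < rows; ++r) {
            for (std::size_t c = 0; c < cols; ++c) {
                const std::size_t cellRow = r * cells / rows;
                const std::size_t cellCol = c * cells / cols;
                const std::size_t w = words[r * cols + c];
                assert(w < vocabSize);
                hist[(cellRow * cells + cellCol) * vocabSize + w] += 1.0f;
            }
        }
        for (float& v : hist) v /= total;
        gist.insert(gist.end(), hist.begin(), hist.end());
    }
    return gist;
}
```

Under these assumptions, a 200-word vocabulary with `maxLevel = 2` gives (1 + 4 + 16) x 200 = 4200 numbers per image. Whether the "2 levels" mentioned above corresponds to `maxLevel = 2` in this sketch is itself an assumption.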

Once we have these gist vectors, we can classify images using an SVM. The SVM kernel is the histogram intersection function, which takes the gist vectors for the input and training images and returns the sum, over all dimensions, of the smaller of the two values in each dimension (once again, see the paper for the gory details).
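As a rough sketch of the kernel just described, the function below computes the sum of element-wise minimums of two gist vectors. The name `histogramIntersection` is hypothetical and is not part of this class, which leaves classification to client programs.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Histogram intersection: K(a, b) = sum over i of min(a[i], b[i]).
// Both vectors must have the same dimensionality.
float histogramIntersection(const std::vector<float>& a,
                            const std::vector<float>& b)
{
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i)
        sum += std::min(a[i], b[i]);
    return sum;
}
```

For normalized histograms, the kernel value is largest when the two vectors are identical and shrinks as their mass falls into different bins.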

This class, viz., GistEstimatorBeyondBoF, only computes gist vectors (i.e., normalized multi-level histograms) given the 200 ``word'' vocabulary of SIFT descriptors to serve as the bins for the histograms. The actual training and classification must be performed by client programs. To assist with the training process, however, this class sports a training mode in which it does not require the SIFT descriptors database. Instead, in training mode, it simply returns the raw grid of SIFT descriptors for the images it is supplied. This allows clients to store those descriptors for clustering, etc.

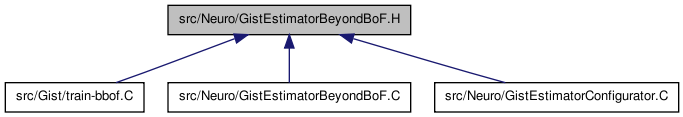

Definition in file GistEstimatorBeyondBoF.H.
