An offline behaviour for rendering the results of the trajectory experiments.

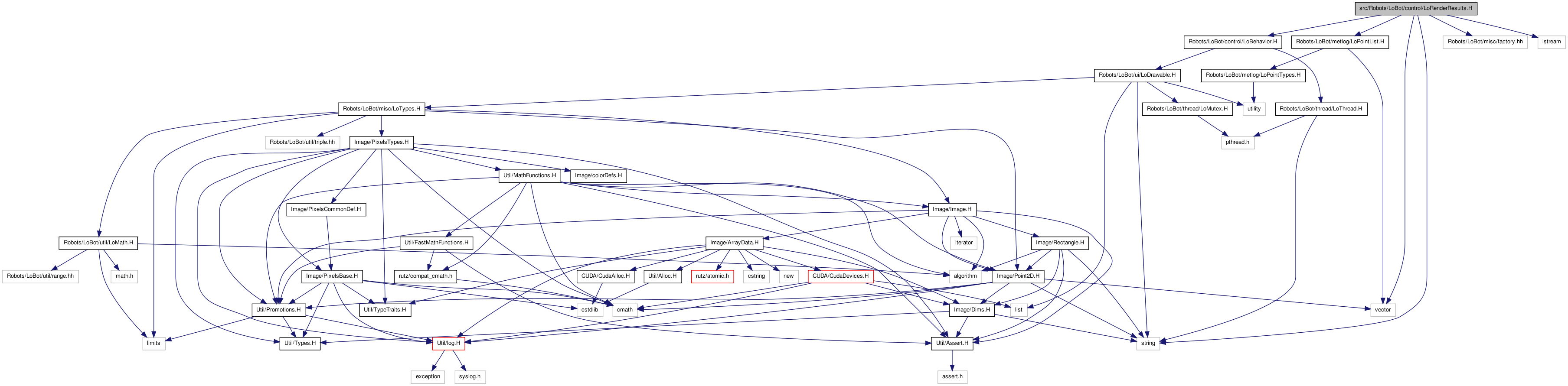

#include "Robots/LoBot/control/LoBehavior.H"
#include "Robots/LoBot/metlog/LoPointList.H"
#include "Robots/LoBot/misc/factory.hh"
#include <istream>
#include <vector>
#include <string>


Classes

| Kind | Name | Description |
|---|---|---|
| class | lobot::RenderResults | An offline behaviour for rendering the robot's trajectory from start to finish and the locations where its emergency stop and extrication behaviours were active. |
| struct | lobot::RenderResults::Goal | A helper struct for holding a goal's bounds (in map coordinates). |

An offline behaviour for rendering the results of the trajectory experiments.

The Robolocust project aims to use locusts for robot navigation. Specifically, the locust sports a visual interneuron known as the Lobula Giant Movement Detector (LGMD) that spikes preferentially in response to objects moving toward the animal on collisional trajectories. Robolocust's goal is to use an array of locusts, each looking in a different direction. As the robot moves, we expect to receive greater spiking activity from the locusts looking in the direction in which obstacles are approaching (or being approached) and use this information to veer the robot away.

Before we mount actual locusts on a robot, we would like to first simulate this LGMD-based navigation. Toward that end, we use a laser range finder (LRF) mounted on an iRobot Create driven by a quad-core mini-ITX computer. A computational model of the LGMD developed by Gabbiani et al. takes the LRF distance readings and the Create's odometry as input and produces artificial LGMD spikes based on the time-to-impact of approaching objects. We simulate multiple virtual locusts by using different angular portions of the LRF's field of view. To simulate reality a little better, we inject Gaussian noise into the artificial spikes.
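The idea of simulating several virtual locusts from a single LRF can be sketched as a partition of the scan into contiguous angular sectors. This is a minimal illustration, not lobot's actual code: the `Sector` type, the `partition_fov` function, the equal-width split and the assumption that the scan is indexed by whole degrees are all hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical: the slice of the LRF scan assigned to one virtual locust.
struct Sector {
    int first_angle; // inclusive, degrees
    int last_angle;  // inclusive, degrees
};

// Split the LRF field of view [fov_min, fov_max] (whole degrees) into n
// contiguous sectors of (nearly) equal width, one per virtual locust.
std::vector<Sector> partition_fov(int fov_min, int fov_max, int n)
{
    assert(n > 0 && fov_max >= fov_min);
    const int span = fov_max - fov_min + 1; // number of integral angles
    std::vector<Sector> sectors;
    sectors.reserve(n);
    for (int i = 0; i < n; ++i) {
        const int first = fov_min + (span * i) / n;
        const int last  = fov_min + (span * (i + 1)) / n - 1;
        sectors.push_back({first, last});
    }
    return sectors;
}
```

For example, `partition_fov(-90, 89, 4)` yields the sectors [-90, -46], [-45, -1], [0, 44] and [45, 89]. If more locusts are requested than there are angles, some sectors come out empty (last < first), which a caller would need to check for.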

We have devised three different LGMD-based obstacle avoidance algorithms:

1. EMD: pairs of adjacent LGMDs are fed into Reichardt motion detectors to determine the dominant direction of spiking activity and steer the robot away from that direction;

2. VFF: a spike rate threshold is used to "convert" each virtual locust's spike rate into an attractive or repulsive virtual force; all the force vectors are combined to produce the final steering vector;

3. TTI: each locust's spike rate is fed into a Bayesian state estimator that computes the time-to-impact given a spike rate; these TTI estimates are then used to determine distances to approaching objects, effectively turning the LGMD array into a kind of range sensor; the distances are compared against a threshold to produce attractive and repulsive forces, with the sum of the force field vectors determining the final steering direction.
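The VFF idea in item 2 can be illustrated with a short sketch. It assumes each virtual locust is described by a viewing direction and a spike rate, and that force magnitudes scale with how far the spike rate lies from the threshold; the `Locust` and `Vec2` types, the function names and that scaling rule are hypothetical and are not taken from lobot.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical description of one virtual locust (not a lobot type).
struct Locust {
    double direction;  // viewing direction in radians; 0 = ahead, +ve = left
    double spike_rate; // spikes per second
};

struct Vec2 {
    double x, y; // x = forward, y = left
};

// VFF-style combination: a locust spiking above the threshold contributes a
// repulsive force (away from its viewing direction); one below the threshold
// contributes an attractive force (toward it). Scaling each force by
// |spike_rate - threshold| is an assumption made for this sketch.
Vec2 vff_steering_vector(const std::vector<Locust>& locusts, double threshold)
{
    assert(threshold >= 0.0);
    Vec2 sum{0.0, 0.0};
    for (const Locust& l : locusts) {
        // Positive excess => push away; negative excess => pull toward.
        const double excess = l.spike_rate - threshold;
        sum.x -= excess * std::cos(l.direction);
        sum.y -= excess * std::sin(l.direction);
    }
    return sum;
}

// Steering direction (radians, same convention as Locust::direction).
double steering_angle(const Vec2& v)
{
    return std::atan2(v.y, v.x);
}
```

With a threshold of 100 spikes/s, a locust looking straight ahead at 200 spikes/s and one looking left at 50 spikes/s give a vector of roughly (-100, 50): the robot is pushed away from the busy front locust and drawn toward the quiet left one.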

We also implemented a very simple algorithm that just steers the robot towards the direction of least spiking activity. Although it functioned reasonably well as an obstacle avoidance technique, it was found to be quite unsuitable for navigation tasks. Therefore, we did not pursue formal tests for this algorithm, focusing instead on the three algorithms mentioned above.
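The simple "least spiking activity" strategy amounts to an arg-min over the locusts' spike rates. A minimal sketch, assuming the rates arrive as one value per virtual locust in a `std::vector` (the function name is hypothetical):

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Index of the virtual locust with the lowest spike rate; a controller
// following this strategy would turn toward that locust's viewing direction.
std::size_t least_active_locust(const std::vector<double>& spike_rates)
{
    assert(!spike_rates.empty());
    const auto it = std::min_element(spike_rates.begin(), spike_rates.end());
    return static_cast<std::size_t>(it - spike_rates.begin());
}
```

`std::min_element` returns the first minimum it finds, so ties are resolved in favour of the lowest-indexed locust.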

To evaluate the relative merits of the above algorithms, we designed a slalom course in an approximately 12' × 6' enclosure. One end of this obstacle course was designated the start and the other end the goal. The robot's task was to drive autonomously from start to goal, localizing itself using Monte Carlo Localization. As it drove, it collected trajectory and other pertinent information in a metrics log.

For each algorithm, we used four noise profiles: no noise, 25 Hz Gaussian noise in the LGMD spikes, 50 Hz, and 100 Hz. For each noise profile, we conducted 25 individual runs. We refer to an individual run from start to goal as an "experiment" and a set of 25 experiments as a "dataset."
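Taking the three formally tested algorithms together, and assuming every algorithm/noise combination was run in full as described, the experimental design works out to:

```latex
N_{\text{datasets}} = \underbrace{3}_{\text{algorithms}} \times \underbrace{4}_{\text{noise profiles}} = 12,
\qquad
N_{\text{experiments}} = 12 \times \underbrace{25}_{\text{runs per dataset}} = 300
```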

The lobot program, properly configured, was used to produce metrics log (metlog) files for the individual experiments. The lomet program was used to parse these metlogs and summarize the robot's average-case behaviour over each dataset. These summary files are referred to as results files.

The lobot::RenderResults class defined here implements an offline behaviour that reads metlog and results files and visualizes them on the map of the slalom enclosure. This behaviour is not meant to control the robot; rather, it visualizes the robot's trajectory from start to finish and plots the points where its emergency stop and extrication behaviours were active, as well as the points where the robot bumped into things. As mentioned above, the point lists for the trajectory, the emergency stop and extrication behaviours, and the bump locations come from either metlog files produced by lobot or results files output by lomet.
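Reading a point list and checking it against a goal region might look roughly like the following. The actual metlog and results file formats are not described here, so the one-`x y`-pair-per-line format, the `Point`, `PointList` and `GoalBounds` types (loosely modelled on `lobot::RenderResults::Goal`) and the `read_points`/`reached_goal` helpers are all assumptions made for illustration.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-ins; these are NOT lobot's PointList or Goal types.
struct Point {
    double x, y; // map coordinates (units assumed)
};
using PointList = std::vector<Point>;

// A goal's bounds in map coordinates (member layout is an assumption).
struct GoalBounds {
    double left, right, bottom, top;

    bool contains(const Point& p) const {
        return p.x >= left && p.x <= right && p.y >= bottom && p.y <= top;
    }
};

// Parse one "x y" pair per line (assumed format); skip lines that
// do not begin with two numbers.
PointList read_points(std::istream& is)
{
    PointList points;
    std::string line;
    while (std::getline(is, line)) {
        std::istringstream fields(line);
        Point p{};
        if (fields >> p.x >> p.y)
            points.push_back(p);
    }
    return points;
}

// True if the trajectory's final point lies inside the goal region.
bool reached_goal(const PointList& trajectory, const GoalBounds& goal)
{
    assert(!trajectory.empty());
    return goal.contains(trajectory.back());
}
```

Keeping parsing separate from the goal test mirrors the split between the point lists and the `Goal` helper listed in the class table, though how lobot actually divides this work is not shown here.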

The ultimate objective behind this visualization is to collect screenshots that can then be presented as data in papers, theses, etc. We could have written Perl/Python scripts to process the metlog and results files and generate pstricks code for inclusion in LaTeX documents. However, since the lobot program already implements map visualization and screen capturing, it is easier to bootstrap off that functionality to produce JPG/PNG images that can then be included in LaTeX documents.

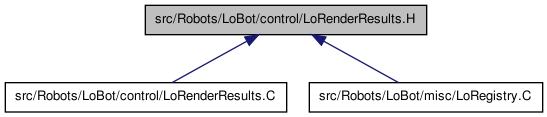

Definition in file LoRenderResults.H.

1.6.3