ScaleSpace is like a Gaussian pyramid but with several images per level. More...

#include <SIFT/ScaleSpace.H>

Public Types | |

| enum | ChannlesDef { LUM_CHANNEL = 0, RG_CHANNEL = 1, BY_CHANNEL = 2 } |

Public Member Functions | |

| ScaleSpace (const ImageSet< float > &in, const float octscale, const int s=3, const float sigma=1.6F, bool useColor=false) | |

| Constructor. | |

| ~ScaleSpace () | |

| Destructor. | |

| Image< float > | getTwoSigmaImage (int channel) const |

| Get the image blurred by 2*sigma. | |

| uint | findKeypoints (std::vector< rutz::shared_ptr< Keypoint > > &keypoints) |

| Find the keypoints in the previously provided picture. | |

| uint | getGridKeypoints (std::vector< rutz::shared_ptr< Keypoint > > &keypoints) |

| Get the keypoints in the previously provided picture. | |

| uint | getNumBlurredImages () const |

| Get number of blurred images. | |

| Image< float > | getBlurredImage (const uint idx) const |

| Get a blurred image. | |

| uint | getNumDoGImages () const |

| Get number of DoG images. | |

| Image< float > | getDoGImage (const uint idx) const |

| Get a DoG image. | |

| Image< float > | getKeypointImage (std::vector< rutz::shared_ptr< Keypoint > > &keypoints) |

| Get a keypoint image for saliency submap. | |

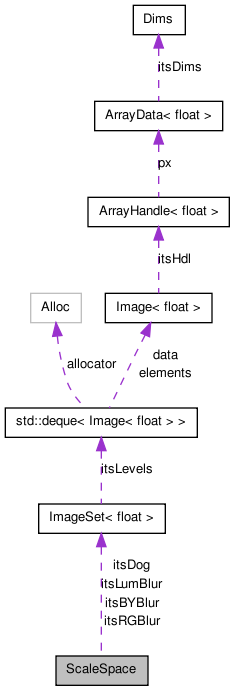

ScaleSpace is like a Gaussian pyramid but with several images per level.

ScaleSpace is a variation of a Gaussian pyramid, where we have several images convolved by increasingly large Gaussian filters for each level (pixel size). So, while in the standard dyadic Gaussian pyramid we convolve an image at a given resolution, then decimate by a factor 2 it to yield the next lower resolution, here we convolve at each resolution several times with increasingly large Gaussians and decimate only once every several images. This ScaleSpace class represents the several convolved images that are obtained for one resolution (one so-called octave). Additionally, ScaleSpace provides machinery for SIFT keypoint extraction. In the SIFT algorithm we use several ScaleSpace objects for several image resolutions.

Some of the code here based on the Hugin panorama software - see http://hugin.sourceforge.net - but substantial modifications have been made, espacially by carefully going over David Lowe's IJCV 2004 paper. Some of the code also comes from http://autopano.kolor.com/ but substantial debugging has also been made.

Definition at line 68 of file ScaleSpace.H.

| ScaleSpace::ScaleSpace | ( | const ImageSet< float > & | in, | |

| const float | octscale, | |||

| const int | s = 3, |

|||

| const float | sigma = 1.6F, |

|||

| bool | useColor = false | |||

| ) |

Constructor.

Take an input image and create a bunch of Gaussian-convolved versions of it as well as a bunch of DoG versions of it. We obtain the following images, given 's' images per octave and a baseline Gaussian sigma of 'sigma'. In all that follows, [*] represents the convolution operator.

We want that once we have convolved the image 's' times we end up with an images blurred by 2 * sigma. It is well known that convolving several times by Gaussians is equivalent to convolving once by a single Gaussian whose variance (sigma^2) is the sum of all the variances of the other Gaussians. It is easy to show that we then want to convolve each image (n >= 1) by a Gaussian of stdev std(n) = sigma(n-1) * sqrt(k*k - 1.0) where k=2^(1/s) and sigma(n) = sigma * k^n, which means that each time we multiply the total effective sigma by k. So, in summary, for every n >= 1, std(n) = sigma * k^(n-1) * sqrt(k*k - 1.0).

For our internal Gaussian imageset, we get s+3 images; the reason for that is that we want to have all the possible DoGs for that octave:

blur[ 0] = in [*] G(sigma) blur[ 1] = blur[0] [*] G(std(1)) = in [*] G(k*sigma) blur[ 2] = blur[1] [*] G(std(2)) = in [*] G(k^2*sigma) ... blur[ s] = blur[s-1] [*] G(std(s)) = in [*] G(k^s*sigma) = in[*]G(2*sigma) blur[s+1] = blur[s] [*] G(std(s+1)) = in [*] G(2*k*sigma) blur[s+2] = blur[s+1] [*] G(std(s+2)) = in [*] G(2*k^2*sigma)

For our internal DoG imageset, we just take differences between two adjacent images in the blurred imageset, yielding s+2 images such that:

dog[ 0] = in [*] (G(k*sigma) - G(sigma)) dog[ 1] = in [*] (G(k^2*sigma) - G(k*sigma)) dog[ 2] = in [*] (G(k^3*sigma) - G(k^2*sigma)) ... dog[ s] = in [*] (G(2*sigma) - G(k^(s-1)*sigma)) dog[s+1] = in [*] (G(2*k*sigma) - G(2*sigma))

The reason why we need to compute dog[s+1] is that to find keypoints we will look for extrema in the scalespace, which requires comparing the dog value at a given level (1 .. s) to the dog values at levels immediately above and immediately below.

To chain ScaleSpaces, you should construct a first one from your original image, after you have ensured that it has a blur of 'sigma'. Then do a getTwoSigmaImage(), decimate the result using decXY(), and construct your next ScaleSpace directly from that. Note that when you chain ScaleSpace objects, the effective blurring accelerates: the first ScaleSpace goes from sigma to 2.0*sigma, the second from 2.0*sigma to 4.0*sigma, and so on.

| in | the input image. | |

| octscale | baseline octave scale for this scalespace compared to the size of our original image. For example, if 'in' has been decimated by a factor 4 horizontally and vertically relatively to the original input image, octscale should be 4.0. This is used later on when assembling keypoint descriptors, so that we remember to scale the coordinates to the keypoints from our coordinates (computed in a possibly reduced input image) back to the coordinates of the original input image. | |

| s | number of Gaussian scales per octave. | |

| sigma | baseline Gaussian sigma to use. |

Definition at line 58 of file ScaleSpace.C.

References ASSERT, CONV_BOUNDARY_CLEAN, sepFilter(), sqrt(), and sum().

| ScaleSpace::~ScaleSpace | ( | ) |

Destructor.

Definition at line 117 of file ScaleSpace.C.

| uint ScaleSpace::findKeypoints | ( | std::vector< rutz::shared_ptr< Keypoint > > & | keypoints | ) |

Find the keypoints in the previously provided picture.

The ScaleSpace will be searched for good SIFT keypoints. Any keypoint found will be pushed to the back of the provided vector. Note that the vector is not cleared, so if you pass a non-empty vector, new keypoints will just be added to is. Returns the number of keypoints added to the vector of keypoints.

Definition at line 156 of file ScaleSpace.C.

References EXT, ImageSet< T >::size(), and ZEROS.

Referenced by VisualObject::computeKeypoints(), and SIFTChannel::doInput().

Get a blurred image.

Definition at line 144 of file ScaleSpace.C.

References ImageSet< T >::getImage().

| uint ScaleSpace::getGridKeypoints | ( | std::vector< rutz::shared_ptr< Keypoint > > & | keypoints | ) |

Get the keypoints in the previously provided picture.

The ScaleSpace will extract keypoints in a grid setup. Those keypoints will be pushed to the back of the provided vector. Note that the vector is not cleared, so if you pass a non-empty vector, new keypoints will just be added to it. Returns the number of keypoints added to the vector of keypoints.

Definition at line 271 of file ScaleSpace.C.

| Image< float > ScaleSpace::getKeypointImage | ( | std::vector< rutz::shared_ptr< Keypoint > > & | keypoints | ) |

Get a keypoint image for saliency submap.

Definition at line 818 of file ScaleSpace.C.

References drawDisk(), height, and ZEROS.

Referenced by SIFTChannel::doInput().

| uint ScaleSpace::getNumBlurredImages | ( | ) | const |

Get number of blurred images.

Definition at line 140 of file ScaleSpace.C.

References ImageSet< T >::size().

| uint ScaleSpace::getNumDoGImages | ( | ) | const |

Get number of DoG images.

Definition at line 148 of file ScaleSpace.C.

References ImageSet< T >::size().

| Image< float > ScaleSpace::getTwoSigmaImage | ( | int | channel | ) | const |

Get the image blurred by 2*sigma.

This image can then be downscaled and fed as input to the next ScaleSpace.

Definition at line 121 of file ScaleSpace.C.

References ASSERT, ImageSet< T >::getImage(), and ImageSet< T >::size().

Referenced by VisualObject::computeKeypoints().

1.6.3

1.6.3