
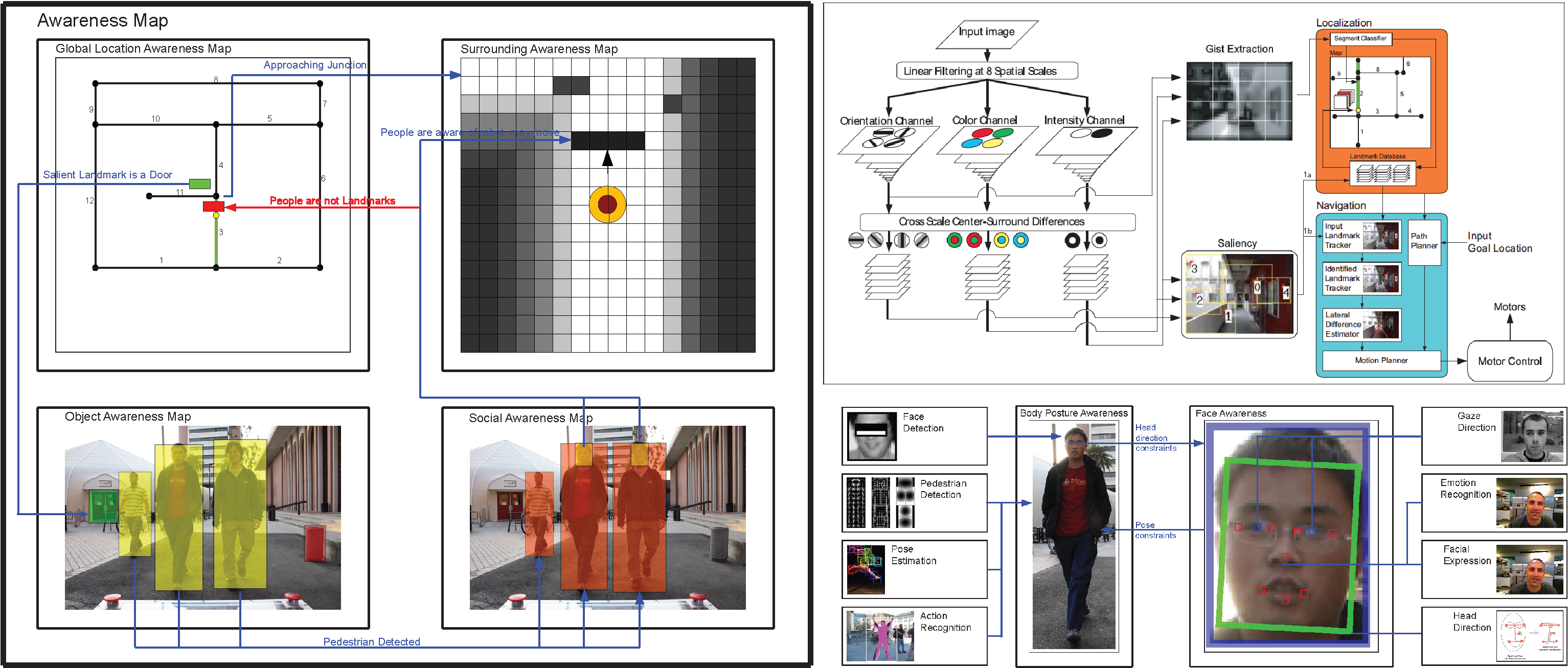

The goal of the software system is to create an autonomous mobile robotic system that can perform robustly in unconstrained outdoor environments, particularly urban environments where many people are walking about. It consists of high-level vision algorithms that address vision-based localization, navigation, object recognition, and Human-Robot Interaction (HRI).

All of the Beobot 2.0 code is freely available in our Vision Toolkit, in particular in the src/Robots/Beobot2.0/ folder. The toolkit also provides other software, such as the microcontroller code that runs the robot.

Note that the first 2 sections (Deadlines and To Do) are ongoing internal message boards. The public section starts at the Current Research section.

Deadlines

Past software deadlines that have already been met can be found here.

| # | Task | Deadline |
|---|---|---|
| 1. | ICRA2014: BeoRoadFinder: vision & tilted LRF | Sept 15, 2013 |
| 2. | IEEE AR 2013 road recognition comparison paper | Oct 31, 2013 |
| 3. | Implement object search system | Dec 1, 2013 |
| 4. | RSS2014: Crowd navigation & understanding | Feb 1, 2014 |
| 5. | Implement Human-Robot Interaction system | Aug 1, 2014 |

Current Research

The specific tasks that we are focusing on are:

- Vision navigation system using salient regions (work notes)

- Recognizing people and other target objects

- Approaching and following people and other target objects

- Real-time human pose recognition and tracking for better mobile Human-Robot Interaction

Other projects:

- Vision-based obstacle avoidance emulating the LGMD (lobula giant movement detector) neurons of locusts

- Hierarchical Simultaneous Localization and Mapping

- Mobile robot exploration

Hierarchical Representation of the Robot's Environment

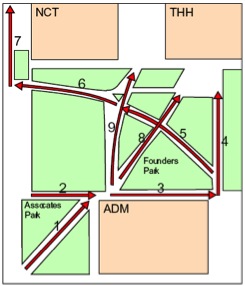

At the center of our mobile robotic system is a hierarchical representation of the robot's environment. We use a two-level map: a global map for localization (recognizing where the robot is) and a local map for navigation (moving about the robot's current surroundings, regardless of whether its exact location is known).

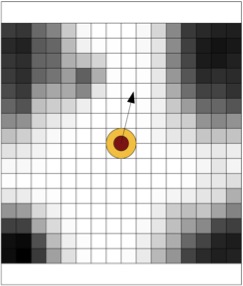

The global map (left image) is a graph-based augmented topological map, which is compact and scales to large environments. For navigation, on the other hand, we use a traditional ego-centric grid occupancy map as the local map (right image), which details the dangers in the robot's immediate surroundings. In the images, the robot is denoted by a circle with an arrow indicating its heading.
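To make the global-map idea concrete, here is a minimal sketch of a topological map in which the robot's location is expressed as a point on an edge, i.e. (edge, distance travelled from the edge's start node). The class and method names (`TopologicalMap`, `location_to_xy`) and the use of 2D node coordinates in metres are assumptions made for illustration, not the Beobot 2.0 toolkit API; whatever extra data the augmented map attaches to nodes and edges is left out.

```python
import math
from dataclasses import dataclass, field


@dataclass
class TopologicalMap:
    """Hypothetical global map sketch (not the toolkit API).

    Nodes are places with (x, y) coordinates in metres; edges are the
    traversable paths between them. Any extra data an augmented map would
    attach to nodes and edges is left out.
    """

    nodes: dict = field(default_factory=dict)  # node_id -> (x, y)
    edges: dict = field(default_factory=dict)  # edge_id -> (start_node, end_node)

    def add_node(self, node_id, x, y):
        self.nodes[node_id] = (x, y)

    def add_edge(self, edge_id, start, end):
        if start not in self.nodes or end not in self.nodes:
            raise KeyError("both end nodes must exist before adding an edge")
        self.edges[edge_id] = (start, end)

    def edge_length(self, edge_id):
        start, end = self.edges[edge_id]
        (xa, ya), (xb, yb) = self.nodes[start], self.nodes[end]
        return math.hypot(xb - xa, yb - ya)

    def location_to_xy(self, edge_id, dist):
        """Convert a location (edge, distance from the edge's start node) to (x, y)."""
        length = self.edge_length(edge_id)
        if not 0.0 <= dist <= length:
            raise ValueError("distance lies outside the edge")
        t = dist / length if length > 0 else 0.0
        start, end = self.edges[edge_id]
        (xa, ya), (xb, yb) = self.nodes[start], self.nodes[end]
        # Linear interpolation between the edge's two end nodes.
        return (xa + t * (xb - xa), ya + t * (yb - ya))


# Usage: a corridor of two edges meeting at a corner.
m = TopologicalMap()
m.add_node(0, 0.0, 0.0)
m.add_node(1, 30.0, 0.0)
m.add_node(2, 30.0, 40.0)
m.add_edge(0, 0, 1)
m.add_edge(1, 1, 2)
print(m.location_to_xy(1, 10.0))  # (30.0, 10.0)
```

In this sketch, storage grows with the number of places and paths rather than with the area they span, which is what keeps this level of the map compact.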

A grid map would be inefficient for global localization: for large-scale environments it is too large to maintain, yet it adds little information beyond what a topological map already holds. We do not need to memorize every square foot of every hallway in the environment; we just need to know which hallway we are on. Because the local map is robot-centric, is updated as the robot moves, and is never committed to long-term storage, the overall representation is both compact (for scalability) and detailed (for accuracy).
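The local map can be sketched as a fixed-size, robot-centred grid that is re-centred whenever the robot moves: cells that fall off the edge are forgotten and newly exposed cells start as unknown, so memory stays constant no matter how far the robot travels. This is a minimal sketch under stated assumptions: the class name `EgoCentricGrid`, the 0.5 "unknown" value, the cell size, and the whole-cell translation (rotation is ignored) are hypothetical choices, not the toolkit's implementation.

```python
class EgoCentricGrid:
    """Hypothetical robot-centred occupancy grid (sketch, not the toolkit API).

    The robot always sits in the centre cell. Values are occupancy
    probabilities in [0, 1]; 0.5 means "unknown". Columns follow the
    grid's x axis and rows its y axis; rotation is ignored.
    """

    UNKNOWN = 0.5

    def __init__(self, size=11, cell_m=0.25):
        if size % 2 == 0:
            raise ValueError("size must be odd so the robot has a centre cell")
        self.size = size
        self.cell_m = cell_m
        self.centre = size // 2
        self.cells = [[self.UNKNOWN] * size for _ in range(size)]

    def _index(self, dx_m, dy_m):
        """Map a metric offset from the robot to (row, col), or None if off-grid."""
        col = self.centre + int(round(dx_m / self.cell_m))
        row = self.centre + int(round(dy_m / self.cell_m))
        if 0 <= row < self.size and 0 <= col < self.size:
            return row, col
        return None

    def mark(self, dx_m, dy_m, p_occupied):
        """Store an occupancy estimate at an offset from the robot (ignored if off-grid)."""
        idx = self._index(dx_m, dy_m)
        if idx is not None:
            row, col = idx
            self.cells[row][col] = p_occupied

    def get(self, dx_m, dy_m):
        """Return the occupancy at an offset from the robot, or None if off-grid."""
        idx = self._index(dx_m, dy_m)
        if idx is None:
            return None
        row, col = idx
        return self.cells[row][col]

    def shift(self, move_cols, move_rows):
        """Re-centre after the robot moves by whole cells.

        Everything in the world appears to move the opposite way, so the new
        cell (r, c) takes the old cell (r + move_rows, c + move_cols).
        Cells pushed past the border are forgotten; exposed cells become UNKNOWN.
        """
        n = self.size
        new = [[self.UNKNOWN] * n for _ in range(n)]
        for r in range(n):
            for c in range(n):
                old_r, old_c = r + move_rows, c + move_cols
                if 0 <= old_r < n and 0 <= old_c < n:
                    new[r][c] = self.cells[old_r][old_c]
        self.cells = new


# Usage: an obstacle 2 m ahead along x gets closer as the robot advances.
grid = EgoCentricGrid(size=5, cell_m=1.0)
grid.mark(2.0, 0.0, 0.9)
grid.shift(1, 0)            # robot advances one cell along x
print(grid.get(1.0, 0.0))   # 0.9: the obstacle is now 1 m away
```

Discarding cells as they leave the window is the sketch's stand-in for "not committed to long-term storage": the grid never holds more than size × size values.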

Navigation

We use a road recognition system to navigate.

Software Tools and Operating System Issues

Discussions related to software tools can be found here. They include firmware-level issues such as low-level computer communication.
